What is Docker?

Docker is a platform for developers and sysadmins to develop, deploy, and run applications with containers.

That is the official description of Docker… but what is a ‘container’, then? In essence, it is a ‘packaged’ application built from a ‘blueprint’ image that runs on top of the Docker Engine, while providing all the functionality it would have if installed manually.

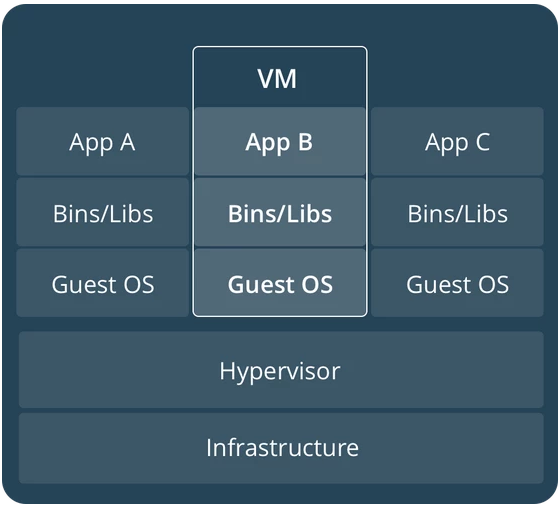

So how does this differ from that other thing they taught us about before for running multiple applications on a single host? Remember the ‘virtualization’ of applications via ‘Virtual Machine’ images? Well, in this regard Docker containers are the next generation of that exact idea.

When we create a Virtual Machine (VM) to distribute our application in, we need to install a guest operating system and then the software we need to run our application, for example Tomcat. We need to configure the Tomcat server and then deploy our application on top of it. We then save a copy of this image somewhere and hand that preconfigured image to a client, so they can run it, with our application on it, on their own hardware. In short, if we deploy multiple VMs on a single hardware instance, we get something like the following image.

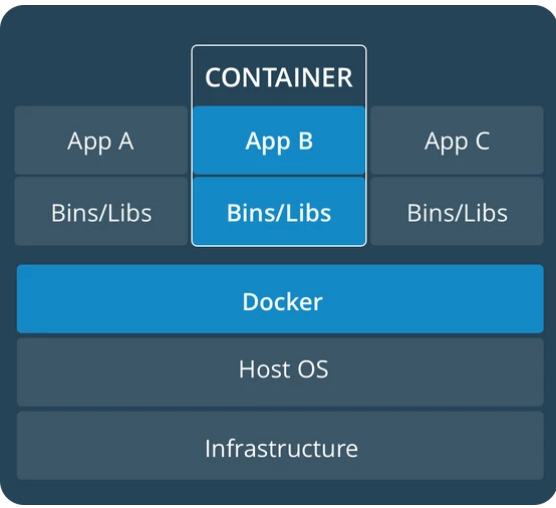

If we do the same with Docker, we create a Docker image that consists only of the Tomcat server we require, its configuration, and our application. This means we do not have to bother with running an operating system for our Docker container: the functionality a guest operating system would have provided is supplied directly by the Docker Engine (with the containers sharing the host's kernel). So if we deploy multiple Docker containers to a single hardware instance, we get something like this.

So we get the benefit of running multiple applications on a single hardware instance without the overhead of providing a guest operating system for each application we deploy. This means we can deploy more applications on the same hardware with Docker, while still keeping a clear separation between all the different applications being run.

So how do we get this Docker thing installed so we can try it out?

Well, depending on your favourite operating system you will have to jump through more or fewer hoops. The jumping instructions can be found at the following places:

Windows: https://docs.docker.com/docker-for-windows/install/

Mac: https://docs.docker.com/docker-for-mac/install/

Linux: … Well …, which distro do you use? Debian? Ubuntu? CentOS? Fedora? Or something else?

Getting your first container up and running

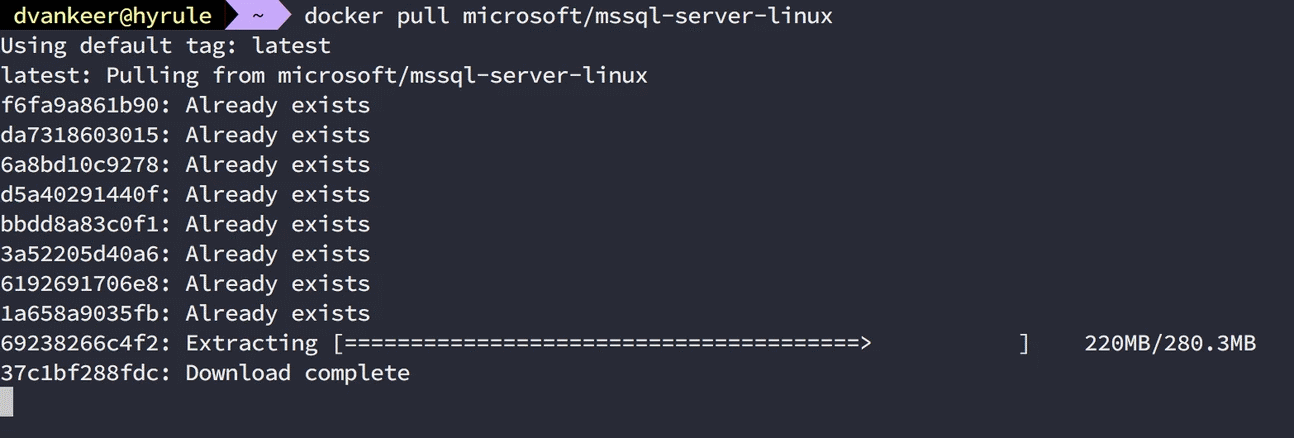

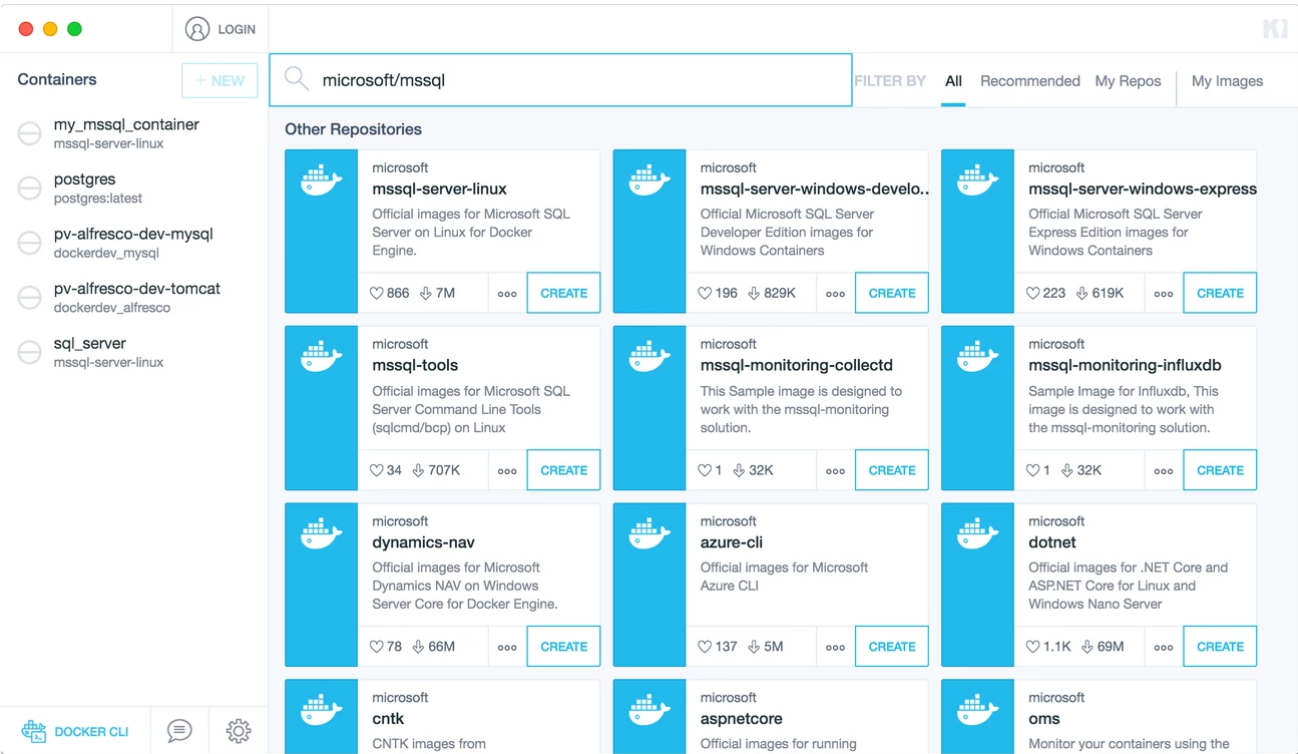

OK, so now we have Docker on our machine; how do we get started? We begin by ‘pulling’ down the Docker image of the container we want to run. This is done on the command line with ‘docker pull <image_name>’. Wait…, how do we find the ‘image_name’ of something I want to run a container of? Most of the time you will search for it on the Docker Hub website or with Docker's built-in ‘docker search’ command. In this tutorial we are going to use the MS SQL Server image for Linux; its name is ‘microsoft/mssql-server-linux’.

```shell
docker search mssql
docker pull microsoft/mssql-server-linux
```

When you pull down an image you will notice a number of simultaneous downloads of different parts of the Docker image; these parts are called ‘layers’. Each Docker image consists of a number of them, and each of them is a bundle of files (in ‘read-only’ mode). These layers are stacked on top of each other to form your container. When you run the image as a container, one small additional layer is added on top: a thin writable layer in which any changes you make to the running container are stored. (This also means that if you destroy your container, all changes/configuration/logs/data/… go to /dev/null, a.k.a. are destroyed as well.)
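As a mental model only (Docker's storage drivers work with whole files in a union filesystem, not with Python dictionaries), the layer stack behaves much like Python's `collections.ChainMap`: reads fall through from the top layer down to the first layer that has the file, while every write lands in the thin writable layer on top. The layer contents below are hypothetical.

```python
from collections import ChainMap

# Hypothetical read-only image layers, modelled as path -> file content mappings.
base_layer = {"/etc/os-release": "Ubuntu", "/bin/bash": "<bash binary>"}
app_layer = {"/opt/mssql/bin/sqlservr": "<sql server binary>"}

def start_container(*image_layers: dict) -> ChainMap:
    """Stack a fresh, empty writable layer on top of the image layers (last = topmost)."""
    writable_layer: dict = {}
    # ChainMap looks keys up from left to right and writes only to the first mapping.
    return ChainMap(writable_layer, *reversed(image_layers))

container = start_container(base_layer, app_layer)
container["/var/opt/mssql/data/master.mdf"] = "<database file>"  # new file: top layer
container["/etc/os-release"] = "Ubuntu (patched)"  # shadows the lower, read-only copy

print(container["/bin/bash"])         # read falls through to the base layer
print(container.maps[0])              # the writable layer holds only our two changes
print(base_layer["/etc/os-release"])  # the image layer itself is untouched

# Destroying the container discards its writable layer; a new container starts clean.
fresh = start_container(base_layer, app_layer)
print("/var/opt/mssql/data/master.mdf" in fresh)
```

The last check is the "/dev/null" effect described above: the new container only sees the image layers, not the data written by the previous one.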

Each image (and each of its read-only layers) has its own SHA-256 digest that uniquely identifies it. Layers also make it possible for multiple containers to reuse the same base binary/library layers when using an image. This helps save disk space on your machine (until we get nice, affordable, giant SSDs) and also saves bandwidth, since a layer you already downloaded for a previous docker pull will not be downloaded again.

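Why can layers be shared at all? Because they are content-addressed: the identifier is derived from a SHA-256 hash of the layer's content, so identical content always yields the same ID. The sketch below is a simplified Python model of that idea, assuming made-up byte strings as layers (real layers are tar archives, and Docker computes its digests over those). It shows a base layer shared by two images being stored and downloaded only once.

```python
import hashlib

def layer_digest(content: bytes) -> str:
    """Content address of a layer: 'sha256:' plus the hex SHA-256 of its bytes."""
    return "sha256:" + hashlib.sha256(content).hexdigest()

# Hypothetical images as lists of layer contents; both share the same base layer.
shared_base = b"base OS files"
images = {
    "image-a": [shared_base, b"tomcat files"],
    "image-b": [shared_base, b"mssql files"],
}

local_store = {}  # digest -> layer content, i.e. what is already on disk
downloads = 0
for image_name, layers in images.items():
    for content in layers:
        digest = layer_digest(content)
        if digest not in local_store:  # only fetch layers we do not have yet
            local_store[digest] = content
            downloads += 1

total = sum(len(layers) for layers in images.values())
print(f"{downloads} layers downloaded for {total} layer references")
```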

Now that we have downloaded the image, it is stored on our machine alongside all other images. To get an overview of all images available to run, we use the ‘docker images’ command. Of course, there are also tools available to manage all these images on our machine.

OK, time to run our first Docker container from the image we have downloaded. We can run it directly with the ‘docker run <image_name>’ command, which creates a new container for us (with a randomly generated name like ‘wonderful_euclid’), or we can first create the container ourselves with ‘docker create --name <container_name> <image_name>’ and then run it with ‘docker start <container_name>’.

```shell
docker create --name my_mssql_container microsoft/mssql-server-linux
docker start my_mssql_container
```

Congratulations, you have just created and run your first docker container.

Interacting with the container

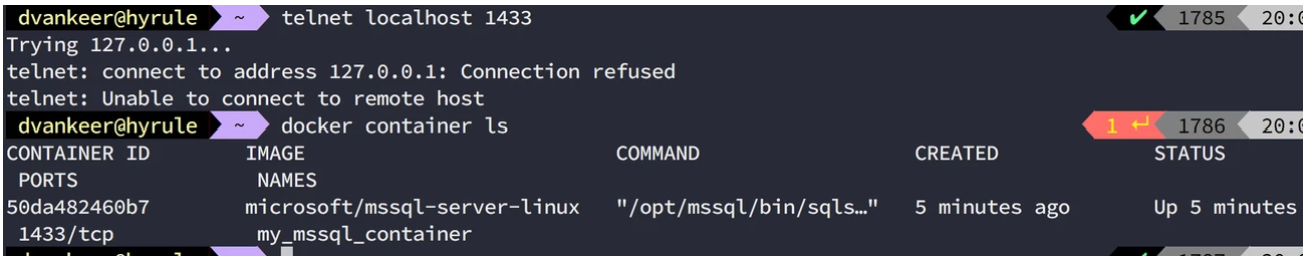

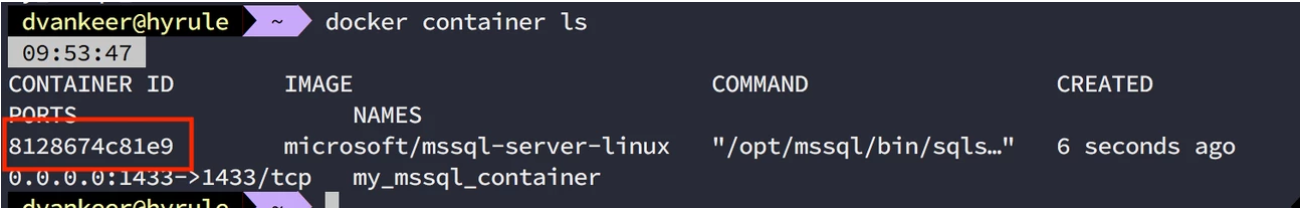

Now that the container is created and started, we can list all running containers with the ‘docker container ls’ or ‘docker ps’ command.

```shell
docker container ls
docker ps
```

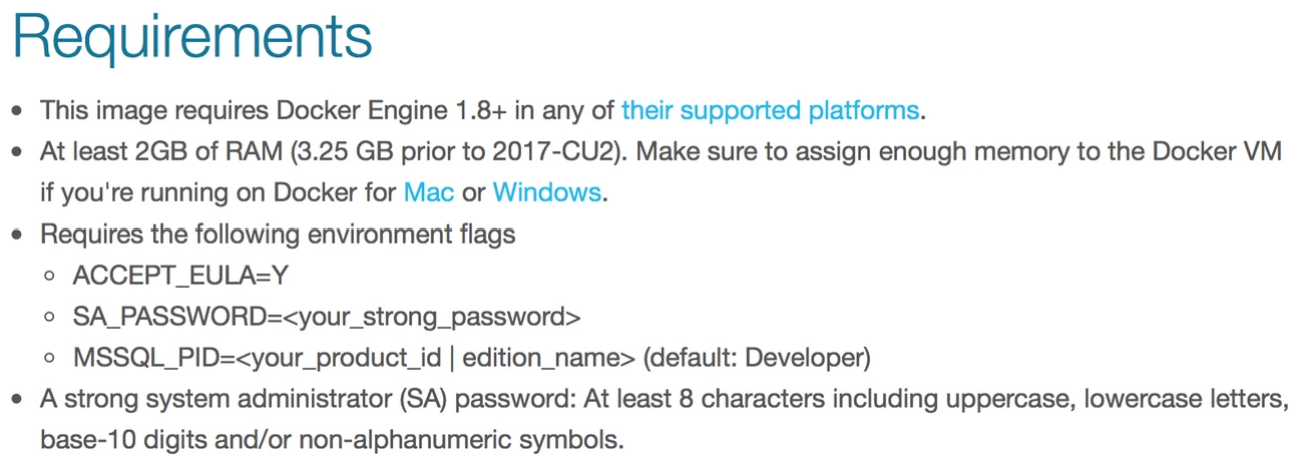

You will notice the container is up and running… or maybe not. It seems our container starts and then shuts down again a bit later. Oh, did we break something? PANIC! What happened? Let’s see if we can find out what is going wrong. What do we normally do when things are not working as intended? Indeed, we look at the logs, but where are they stored? No need to search for them: Docker helps you with this as well. With the ‘docker container logs <container_name>’ command we can access the logs. And lo and behold… it seems we need to accept an EULA. Ah right, the Docker Hub page for the MS SQL Server image mentions that under its requirements section, along with a few other requirements to make sure the container works properly.

Ah! We need to pass a number of environment variables to the container, so it knows that we accepted the EULA (yeah, those things again, lawyer speak included). As always, we just nod our head and say ‘yeah sure’. We also need to provide a ‘strong’ password for the SA account. At least the image provides a sensible default for which edition to run: for our usage the default Developer edition is good enough. (Reminder: for any other edition you will need a valid license!)

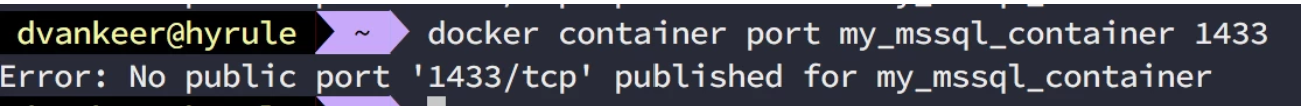

So how do we pass these environment variables to the container? Let’s see what options we have when we create a container. We can find out by adding --help to the command (this works for most Docker commands, so try it out!). So we run ‘docker create --help’. … What?! So much text…, let’s see. Ah, here we go… we can use the ‘-e’ or ‘--env’ parameter to set environment variables. So to create our container we would use ‘docker create --name <container_name> -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=<password>' microsoft/mssql-server-linux’.

```shell
docker create --help
docker create --name my_mssql_container -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=Qwerty_123!' microsoft/mssql-server-linux
```
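If you script this instead of typing it, a handy pattern is to build the command as a list of arguments. Below is a minimal Python sketch under that assumption; `docker_create_args` is a hypothetical helper (not part of any Docker SDK) that only assembles the same `--name` and `-e` options shown above, so nothing is executed and Docker is not needed to try it. Because each list element is handed to Docker as-is, a password containing characters like `!` needs no shell quoting.

```python
import shlex

def docker_create_args(name: str, image: str, env: dict[str, str]) -> list[str]:
    """Hypothetical helper: build the argv for 'docker create' with one -e per variable."""
    args = ["docker", "create", "--name", name]
    for key, value in env.items():
        args += ["-e", f"{key}={value}"]  # same as -e 'KEY=value' on the command line
    args.append(image)  # options first, image name last
    return args

args = docker_create_args(
    "my_mssql_container",
    "microsoft/mssql-server-linux",
    {"ACCEPT_EULA": "Y", "SA_PASSWORD": "Qwerty_123!"},
)
# Running it would be: subprocess.run(args, check=True)  (requires Docker to be installed)
print(shlex.join(args))  # the equivalent, correctly quoted shell command line
```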

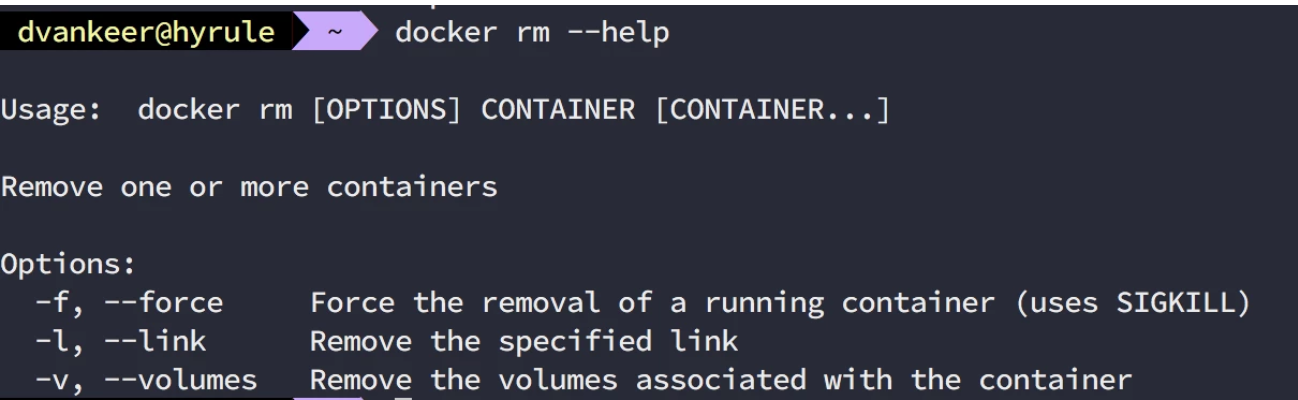

Oh well, that did not go as expected. Now how do we get rid of the previous container we created… --help!

```shell
docker --help
docker rm --help
```

So we remove the container with ‘docker rm <container_name>’ and create the new one with the environment settings once more. If all goes well, our container now stays up and running. Let’s connect to our sparkling new SQL database server.

What?! Not running at all? I thought that container was up and running! Well, our container is up, but we cannot seem to reach the server port, even though a port is mentioned in the ‘docker container ls’ output. We must have missed something, so let’s check. We can verify the container's port configuration with the ‘docker container port’ command, which also supports inspecting a specific port number.

```shell
docker container --help
docker container port my_mssql_container 1433
```

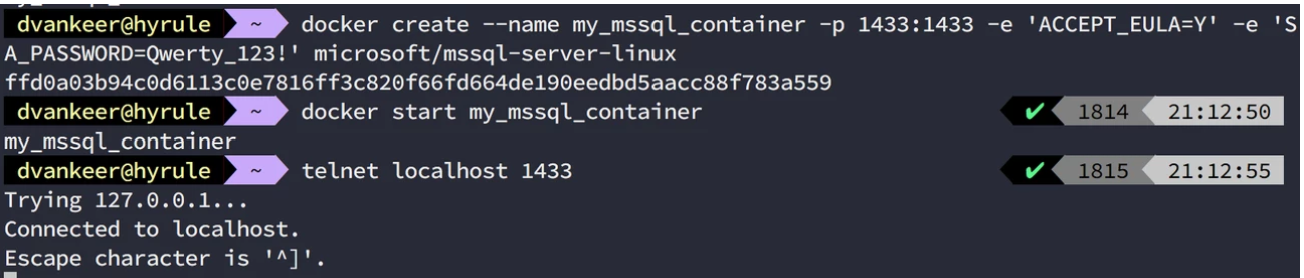

It seems we have not published the port from our container. How do we do this? Once more, --help to the rescue. We can provide a port mapping when we create the container by using the ‘-p’ or ‘--publish’ flag. The help text does not give a good clue on how to use it, but the syntax is ‘host_port:docker_port’. So once more we remove our container and create a new one with an extra option added. The create command becomes ‘docker create --name <container_name> -p <host_port>:<docker_port> -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=<password>' microsoft/mssql-server-linux’.

```shell
docker rm my_mssql_container
docker create --name my_mssql_container -p 1433:1433 -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=Qwerty_123!' microsoft/mssql-server-linux
docker start my_mssql_container
```
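To make the host_port:docker_port order concrete, here is a minimal Python sketch of how such a `-p` value breaks down. It is an illustration, not Docker's own parser: it assumes the simple `[host_ip:]host_port:container_port[/protocol]` form and does not handle port ranges, IPv6 addresses, or a bare container port (which Docker publishes on a random host port).

```python
def parse_port_mapping(spec: str) -> dict:
    """Parse a simple -p value: [host_ip:]host_port:container_port[/protocol]."""
    mapping, _, protocol = spec.partition("/")
    parts = mapping.split(":")
    if len(parts) == 2:
        host_ip = ""
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port mapping: {spec!r}")
    return {
        "host_ip": host_ip or "0.0.0.0",  # no IP given: published on all host interfaces
        "host_port": int(host_port),      # the left-hand side is always the host
        "container_port": int(container_port),
        "protocol": protocol or "tcp",    # tcp unless e.g. /udp is appended
    }

print(parse_port_mapping("1433:1433"))
print(parse_port_mapping("127.0.0.1:14330:1433"))  # host port 14330 -> container port 1433
```

Binding to 127.0.0.1, as in the second example, keeps the SQL Server port reachable from your own machine only.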

And behold, our first fully functional container. As mentioned above, we can also use the run command with the same options to have the container created (and started) for us with a random name. This covers the basics you need to get your hands dirty with Docker. A handy reference, a so-called cheat sheet of Docker commands, is available for you to use.

But dear sir, I don’t like all those command-line thingies; I’d rather have something more visual to do all this. Isn’t there an option for that? There is: Kitematic (still in beta) lets you perform all of these actions in a GUI. It should be bundled with your Docker CE installation (at least it is on the Mac).

Running a specific version

So far we have always used the ‘latest’ version of the MS SQL Server Linux image, but what if we don’t want the latest and greatest and would rather stick to something more specific? That is possible, because each image has a so-called ‘tag’. If the publisher of the image has added a specific tag to an image, we can use that version instead, simply by appending ‘:<tag_name>’ to the image name. When no tag is given, Docker uses ‘latest’.
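A minimal Python sketch of how an image reference splits into name and tag, assuming the plain `name[:tag]` form (digest references such as `@sha256:…` are not handled). The one subtlety is that a colon only starts a tag when it comes after the last slash, so a registry port is not mistaken for a tag. The tag `my-tag` and the registry host below are hypothetical.

```python
def split_tag(reference: str, default_tag: str = "latest") -> tuple[str, str]:
    """Split 'name[:tag]' into (name, tag); without a tag, the default is used."""
    last_slash = reference.rfind("/")
    last_colon = reference.rfind(":")
    if last_colon > last_slash:  # a colon after the last '/' introduces the tag
        return reference[:last_colon], reference[last_colon + 1:]
    return reference, default_tag  # no tag given, as in a plain 'docker pull <image>'

print(split_tag("microsoft/mssql-server-linux"))         # no tag: falls back to 'latest'
print(split_tag("microsoft/mssql-server-linux:my-tag"))  # hypothetical tag name
print(split_tag("myregistry.example.com:5000/foo/app"))  # the port is not a tag
```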

Unfortunately, the Docker CLI does not let us list the tags of an image (although the registry API supports this), so for now you can just look at the tags tab of the image on Docker Hub. For example, the tags of MS SQL Server for Linux are located at https://hub.docker.com/r/microsoft/mssql-server-linux/tags/

Peeking inside ... a running container

We can execute a program in a running container with the ‘docker exec -it <container_id> <executable>’ command, where the container ID can be found in the output of the ‘docker container ls’ command. You don’t have to type the full container ID; you just need to make sure it uniquely identifies the container among all running containers. In our case we have only one running container, so just giving ‘8’ as the container ID is sufficient to identify it. While the container itself keeps running its entry point command (a.k.a. what is run when the container starts), we ask for an interactive bash terminal (or PowerShell for a Windows container).

```shell
docker container ls
docker exec -it <container_id> /bin/bash
```
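How much of the ID do you need to type? Here is a minimal Python sketch of the unique-prefix rule described above, assuming a plain list of (hypothetical) container IDs. Docker itself also accepts container names, and its error messages differ from the ones below.

```python
def resolve_container(prefix: str, container_ids: list[str]) -> str:
    """Return the one container ID that starts with `prefix`, or raise ValueError."""
    matches = [cid for cid in container_ids if cid.startswith(prefix)]
    if not matches:
        raise ValueError(f"no container ID starts with {prefix!r}")
    if len(matches) > 1:
        raise ValueError(f"{prefix!r} is ambiguous: matches {matches}")
    return matches[0]

running = ["8c2f1e7d9a3b", "4a9d0b6e2c71", "4af3c8e1d205"]  # hypothetical IDs
print(resolve_container("8", running))    # unique: only one ID starts with '8'
print(resolve_container("4a9", running))  # '4a' alone would be ambiguous here
```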

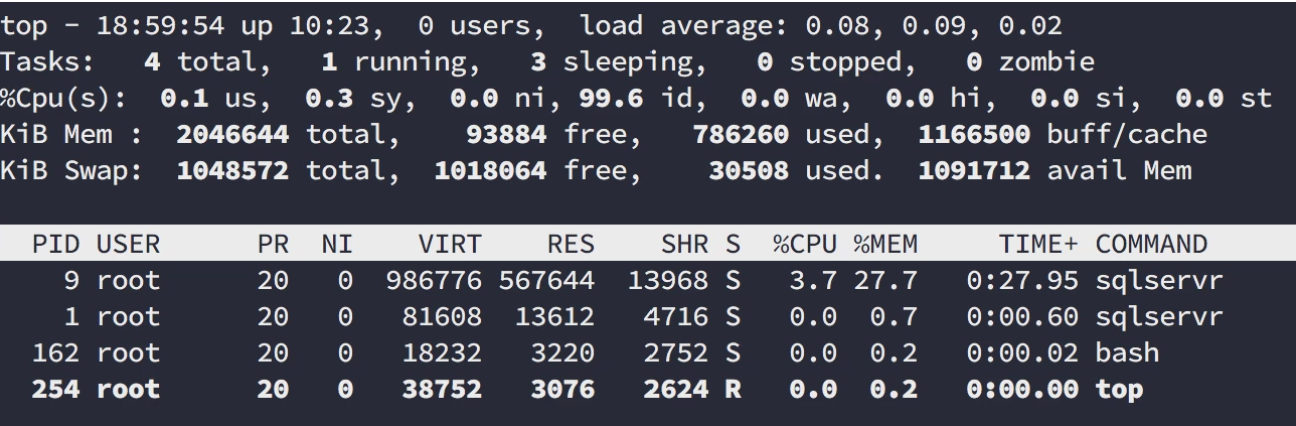

In our case we want to access the CLI, so we can simply pass ‘bash’ as the executable name. Any executable on the container's configured ‘path’ can be invoked directly; otherwise you need to supply the full path to the executable. This allows us to manipulate the container like any regular Linux system.

It also lets us show you that containers only run the tasks they need to run: we have two ‘sqlservr’ processes up, plus the bash instance we started, in which we run top. Easy to keep an overview.


Peeking inside ... a stopped container

We can also take a look inside a stopped container. This lets us look around the filesystem to find out why a container exits prematurely or does not start, when the ‘docker container logs <container_name>’ command does not give us much information. First we look at the container listing we get by running ‘docker ps -a’; the -a flag lists all containers, including the inactive ones.

With the ID from this list, we can create a new image based on the contents of an existing container (including all changes that have been made to it) via the ‘docker commit <container_id> <image_name>’ command. We can then start a container from this image with a new entry point (which replaces the default entry point) via ‘docker run -ti --entrypoint=<command_to_start> <image_name>’. This allows us to provide ‘bash’ as the new entry point, which drops us into a CLI environment where we can inspect what is going wrong with the container. (So in our example, instead of having the MS SQL container run its sqlservr command, we start bash as the entry point; when we look at the running processes inside the container, we see no sqlservr running, just our bash.)

```shell
docker commit <container_id_of_stopped_container_to_peek_into> <image_name_to_peek_into>
docker run -ti --entrypoint=/bin/bash <image_name_to_peek_into>
```

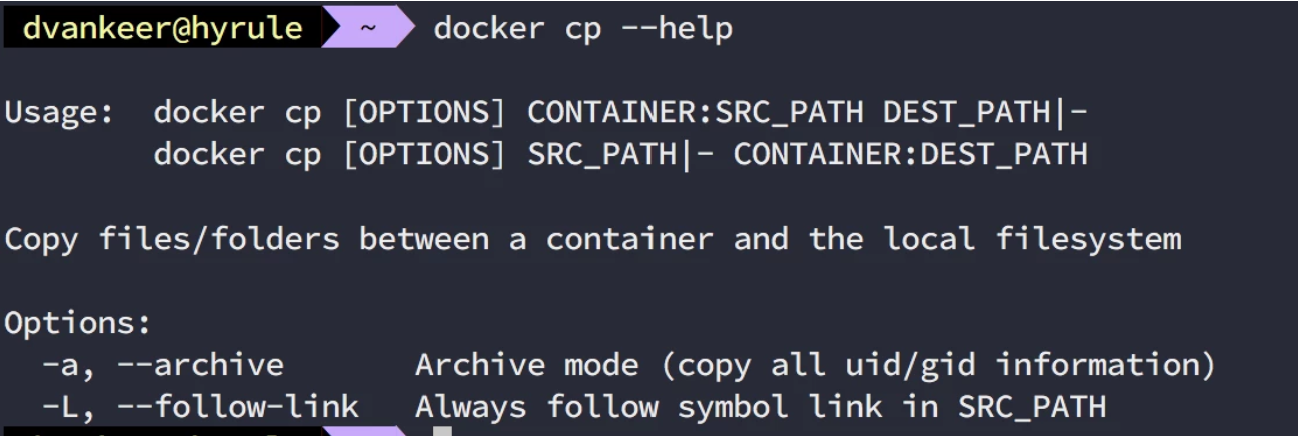

Transfer files from and to a container

You have your favourite application running in a container, but you need to change its configuration. You could perform the change in the running container using the CLI like we just discussed, but the creator of the container forgot to include your favourite text editor (… let the Editor war commence). We could jump through all kinds of hoops to get your favourite editor installed, only to see it vanish once the container gets destroyed. Let us opt for the more sensible approach of editing the file on your host machine instead. This requires us to be able to get files into and out of the container somehow. That support is built in with the ‘docker cp’ command.

So to retrieve the error logs from the MS SQL container we created, we would use ‘docker cp <container_name>:<container_path> <local_path>’, and to copy a file from our host machine into the container we use the reverse form, as in ‘docker cp <local_path> <container_name>:<container_path>’.

Managing container data

In the MS SQL container we have set up, the database data lives under the /var/opt/mssql directory. Each time we destroy our container, we lose all the data we have accumulated over time. This is fine for a development environment: we can easily start with a clean database and build it back up from our deploy scripts. But now we want to move our container into a test environment, where we do not want our data to be destroyed each time we destroy/create a container. We want to store our data in a more persistent manner. How can we do this? Docker offers two possibilities, namely ‘bind mounts’ and ‘volumes’.

Bind mount

With a ‘bind mount’ we mount a file or directory from the host machine into the Docker container, so we give our container access to a particular part of the host. Both the container and the host can manipulate the files within the mount at the same time. So if we want the entire /var/opt/mssql directory to be written onto the host instead of inside the container, we mount a host directory via ‘docker create --mount type=bind,source=<local_directory>,target=<container_directory> --name <container_name> -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=<password>' -p 1433:1433 microsoft/mssql-server-linux’ (Note: due to a technical issue this cannot be done on a Mac for the ‘MS SQL data’ directory).

Volume

Unlike a bind mount, a volume is independent of the host OS and is managed by Docker itself. Volumes can also be shared between ‘linux’ and ‘windows’ containers because they do not depend on the host filesystem. Before we can use a volume with a container, we first need to create it, which is easily done with ‘docker volume create <volume_name>’. This (still empty) volume can then be mounted when creating a new container: ‘docker create --mount source=<volume_name>,target=<container_directory> --name <container_name> -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=<password>' -p 1433:1433 microsoft/mssql-server-linux’.

With a bind mount we have direct access to the data to manipulate it, but how do we get data into a volume? This can only be done by attaching it to a container and transferring files into that container, like we discussed before. One way is to run an existing container with the volume mounted on it: when you do, the contents of the container's original directory are automagically copied into the (still empty) volume. If needed, a helper container like busybox can be used instead, and we then copy the files from our local machine into that container as discussed before.

```shell
docker run --mount source=<data_volume_name>,target=<container_directory> --name helper busybox true
docker cp <local_path> helper:/<container_directory>
docker rm helper
```

Windows containers and volume support

Windows containers do NOT support mounting a bind mount or volume onto a non-empty directory or onto the C: drive itself.

Using a private Docker registry

What makes Docker so handy is the availability of images for all kinds of tasks. The main registry we have been using is the ‘Docker Hub registry’, and most images you ‘pull’ come from it. But like all parts of Docker, we can run our own registry to host private images (for example for our clients). So how can we pull our own private images from a different registry? Let’s first analyse how the ‘docker pull’ command works. When we, for example, perform a ‘docker pull ubuntu’, it is actually a shortcut for a longer command, namely ‘docker pull docker.io/library/ubuntu’, where docker.io is the main Docker Hub registry we have been using all along and library is the namespace that holds the official images. So how do we get an image from our own private registry (on AWS, for example)? First the administrator needs to configure the repository and populate it with the images we need. Then it simply becomes a matter of prefixing the hostname of the registry to the image we want to pull. The docker pull command becomes something like ‘docker pull <private_registry_domain>:<port_number>/foo/<image_name>’.
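The shortcut rule can be sketched in a few lines of Python. This is a simplified model of how short image names are expanded, assuming only these rules: if the first path component looks like a host (it contains a `.` or `:`, or is `localhost`) it is treated as the registry; otherwise `docker.io` is assumed, single-component names get the `library/` namespace, and a missing tag becomes `latest`. The private registry hostname is hypothetical.

```python
def normalize_reference(reference: str) -> str:
    """Expand a short image name the way 'docker pull' interprets it (simplified)."""
    first, _, rest = reference.partition("/")
    # The first component is a registry only if it looks like a host name.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", reference
        if "/" not in path:
            path = "library/" + path  # official images live in the 'library' namespace
    if ":" not in path.rsplit("/", 1)[-1]:
        path += ":latest"  # no tag given: Docker assumes 'latest'
    return f"{registry}/{path}"

print(normalize_reference("ubuntu"))
print(normalize_reference("microsoft/mssql-server-linux"))
print(normalize_reference("registry.example.com:5000/foo/app:1.0"))  # hypothetical host
```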

```shell
# Linux/Mac OS: get login credentials (AWS CLI v1; AWS CLI v2 replaced this with 'aws ecr get-login-password')
aws ecr get-login --no-include-email
# Windows (AWS Tools for PowerShell): log in using the generated login command
Invoke-Expression -Command (Get-ECRLoginCommand -Region eu-west-1).Command
# Authenticate Docker on Amazon ECR ('aws ecr get-login' outputs the docker login command in the form below)
docker login -u AWS -p <password> https://<aws_account_id>.dkr.ecr.eu-west-1.amazonaws.com
# Pull an existing image
docker pull <aws_account_id>.dkr.ecr.eu-west-1.amazonaws.com/<your_image_name>:<tag>
# Tag and push a local image
docker tag <local_image_id> <aws_account_id>.dkr.ecr.eu-west-1.amazonaws.com/<your_image_name>:<tag>
docker push <aws_account_id>.dkr.ecr.eu-west-1.amazonaws.com/<your_image_name>:<tag>
```

Creating your first docker image

Now that we have learned how to use Docker and manipulate it a bit, it is time to see how we can create our very own image.

All Docker images are made using a file called the ‘Dockerfile’. This file consists of a number of build actions that are performed, in order, to reach the final image we want. Sample Dockerfiles for a .NET Core-based and a Java-based image:

```dockerfile
FROM microsoft/dotnet:2.1-runtime
WORKDIR /app
COPY <local_build_directory_project> ./
ENTRYPOINT ["dotnet", "dotnetapp.dll"]
```

```dockerfile
# Or use the alpine variant once it is released for Java 10
FROM openjdk:10-slim
WORKDIR /usr/src/myapp
COPY <local_build_java_project> ./
ENTRYPOINT ["java", "Main"]
```

So what do these Dockerfiles do?

First, with the FROM instruction, they use the Microsoft .NET Core 2.1 runtime (for the .NET image) or OpenJDK 10 (for the Java image) as a starting point. Each Dockerfile must start with this instruction and the image you want to build on (only comments, parser directives and ARG instructions may come before it). (If you want to start from nothing, you can use the special, empty ‘scratch’ image: ‘FROM scratch’.) This initializes the build stage of the Docker image. With FROM you select the ‘base’ Docker image you want and extend that image, which means any Docker image that already exists in the world can be extended and customised.

Then it COPYs a locally built .NET Core/Java project into the selected WORKDIR: ‘/app’ for .NET and ‘/usr/src/myapp’ for Java. The WORKDIR instruction sets the working directory, which lets us use . as a shortcut for it.

And last but not least, the most important part is the ENTRYPOINT. This is the command that will be executed when the container (which has been created from this image) starts. So in this example it starts our .net/java application itself.

Build the image

Now that we have a basic Dockerfile, we need to actually bake it into an image. This is done with ‘docker build <path_to_build_context>’, where the build context is the directory containing the Dockerfile (a Dockerfile elsewhere can be selected with -f). The result is a new image, with an image ID, placed in our local image store. If you want it to have a friendlier name, use ‘docker build -t <friendlier_image_name> <path_to_build_context>’.

```shell
docker build <path_to_directory_containing_your_Dockerfile>
docker build -t <friendly_image_name> <path_to_directory_containing_your_Dockerfile>
```

So if we ran the image now, our already built ‘dotnetapp.dll’ or ‘Main’ application would start in a container, on the runtime we started from. But that would not be very handy yet, since it runs the application itself but exposes nothing to the outside.

Exposing services

Now that we have our basic Docker image, we want to extend its usefulness by exposing any services it provides to the outside (let’s pretend both our applications provide a web server on port 80).

This is done with the EXPOSE instruction, which declares the container ports the application listens on so they can be published to the Docker host. So let’s update our .NET Core/Java Dockerfiles to expose port 80 (and 443 for Java). Once this has been done, a user of the container can publish these container ports onto the Docker host in whichever way they want (for example with -p, or with -P to publish all exposed ports on random host ports).

```dockerfile
FROM microsoft/dotnet:2.1-runtime
# Exposes the http port (comments must be on their own line in a Dockerfile)
EXPOSE 80
WORKDIR /app
COPY <local_build_directory_project> ./
ENTRYPOINT ["dotnet", "dotnetapp.dll"]
```

```dockerfile
# Or use the alpine variant once it is released for Java 10
FROM openjdk:10-slim
# Exposes both the http and https ports
EXPOSE 80 443
WORKDIR /usr/src/myapp
COPY <local_build_java_project> ./
ENTRYPOINT ["java", "Main"]
```

Build and environment arguments

Sometimes we do not know certain bits of information when we are writing a Dockerfile; we may need this information during the build of the image or even at runtime. For this type of information we can include ARG and ENV instructions in our Dockerfile, so the missing piece of information can be supplied once we know it.

- The ARG keyword is only used during the build of the image. It allows us to tweak the resulting image to a specific use case/environment.

- The ENV keyword is used during the build of the image AND at runtime of the container created from the image.

Both keywords are used by simply declaring the variable in the Dockerfile, optionally with a default value.

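```dockerfile
FROM microsoft/dotnet:2.1-runtime
# No default: the value is empty unless one is passed with --build-arg at docker build
ARG BUILDTIME_ARGUMENT
ARG BUILDTIME_ARGUMENT_WITH_DEFAULT=<default_value>
# Dockerfile variable substitution uses $NAME (not PowerShell's $env:NAME)
ENV RUNTIME_ARGUMENT=$BUILDTIME_ARGUMENT
WORKDIR /app
COPY <local_build_directory_project> ./
ENTRYPOINT ["dotnet", "dotnetapp.dll"]
```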

```dockerfile
FROM openjdk:10-slim
# No default: the value is empty unless one is passed with --build-arg at docker build
ARG BUILDTIME_ARGUMENT
ARG BUILDTIME_ARGUMENT_WITH_DEFAULT=<default_value>
ENV RUNTIME_ARGUMENT=$BUILDTIME_ARGUMENT
WORKDIR /usr/src/myapp
COPY <local_build_java_project> ./
ENTRYPOINT ["java", "Main"]
```

Sensitive information

Do not put any sensitive information in ARG variables, since their values can be inspected via the ‘docker history’ command.

To use these build arguments, we supply them with the build command, in the following fashion:

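```shell
docker build --build-arg <argument_name>=<value> <path_to_build_context>
docker build -t <friendly_image_name> --build-arg <argument_name>=<value> --build-arg <other_argument_name>=<value> <path_to_build_context>
```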

And to set the environment variables, we supply them when we actually create/run the container itself, like we did in our MS SQL example before:

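```shell
docker create -e '<VARIABLE>=<value>' <image_name>
docker run -e '<VARIABLE>=<value>' -e '<OTHER_VARIABLE>=<value>' <image_name>
# Without =<value>, the value is taken from the same variable in your host environment
docker run -e '<VARIABLE>' <image_name>
# Where <environment_file> is a key/value list of all environment variables you want to pass along
docker run --env-file=<environment_file> <image_name>
```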
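To make the --env-file format concrete, here is a minimal Python sketch of how such a file is read, based on the format described in the docker run reference: one `VARIABLE=value` per line, lines starting with `#` are comments, and a bare `VARIABLE` copies the value from your host environment. It is a simplified illustration, not Docker's own parser, and the file contents are hypothetical.

```python
import os

def parse_env_file(text: str, host_env=None) -> dict[str, str]:
    """Read env-file content into a dict (simplified sketch of the --env-file format)."""
    if host_env is None:
        host_env = os.environ
    variables = {}
    for raw_line in text.splitlines():
        line = raw_line.lstrip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, has_value, value = line.partition("=")
        if any(ch.isspace() for ch in name):
            raise ValueError(f"variable name {name!r} contains whitespace")
        if has_value:
            variables[name] = value  # taken literally: quotes are NOT stripped
        elif name in host_env:
            variables[name] = host_env[name]  # bare name: copy the host's value
    return variables

# Hypothetical file contents for our MS SQL container
example = """
# SQL Server settings
ACCEPT_EULA=Y
SA_PASSWORD=Qwerty_123!
MSSQL_PID
"""
print(parse_env_file(example, host_env={"MSSQL_PID": "Developer"}))
```

Note the literal handling of values: unlike a shell, the file needs no quoting around `Qwerty_123!`, and any quotes you do add become part of the value.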

Command execution

Sometimes we need to execute one or more commands during the build of the image. This is done with the RUN instruction, which executes a command with all the arguments we require, just like we would on a regular CLI. (Of course, the command itself must be available in the image you are building on, as selected with the FROM instruction.)

```dockerfile
FROM microsoft/dotnet:2.1-sdk
# Build configuration to publish with (override with --build-arg environment=...)
ARG environment=LOCAL
# Copy csproj files to perform a restore (the trailing / makes the destination a directory)
WORKDIR /app
COPY src/<project_dir>/*.csproj ./<project_dir>/
COPY src/<project_dependency_dir>/*.csproj ./<project_dependency_dir>/
# Perform the restore
WORKDIR /app/<project_dir>
RUN dotnet restore
# Copy the source code
WORKDIR /app
COPY src/<project_dir>/. ./<project_dir>
COPY src/<project_dependency_dir>/. ./<project_dependency_dir>
COPY src/SolutionItems ./SolutionItems
# Perform a build
WORKDIR /app/<project_dir>
RUN dotnet publish -c $environment -o out
# Start the application from the publish output
WORKDIR /app/<project_dir>/out
ENTRYPOINT ["dotnet", "<project>.dll"]
```

```dockerfile
FROM openjdk:10-slim
# Install maven to build our project with
RUN apt-get update -y && apt-get install maven -y
# Copy source and POM file
WORKDIR /usr/src/app
COPY src/ ./src/
COPY pom.xml ./
# Execute maven clean and package
RUN mvn -f ./pom.xml clean package
# Maven places the jar in the target directory of the project
ENTRYPOINT ["java","-jar","/usr/src/app/target/<application_name>.jar"]
```

Volume declarations

While creating an image we can declare a VOLUME, which can later be used with a mount. This allows the creator of the image to provide a number of hooks for users of the container to work with. When declaring a VOLUME, make sure you have done all manipulation of the files that reside in it before the declaration, because any changes made to those files after the VOLUME is declared will be discarded!

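```dockerfile
# Don't: touch and chown run after VOLUME, so their changes to /data are discarded
FROM debian:wheezy
RUN useradd foo
VOLUME /data
RUN touch /data/x
RUN chown -R foo:foo /data
```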

```dockerfile
# Do: prepare /data first, then declare it as a VOLUME
FROM debian:wheezy
RUN useradd foo
RUN mkdir /data && touch /data/x
RUN chown -R foo:foo /data
VOLUME /data
```

Large data volumes

Volumes are initialized when a container is created. If the container’s base image contains data at the specified mount point, that existing data is copied into the new volume upon volume initialization. This means if you define a VOLUME on a directory which contains a large amount of data this copy can take a long while when creating your container! So be careful which data you expose via VOLUME.

Multistage builds

Multistage builds allow us to build a project in a container with all the required tools, and then slim down to an image that runs the actual artefact on just the runtime, without the special dependencies that were needed to build the project. This is done by using multiple FROM statements, where only the last FROM stage is used for the image being created; earlier stages hand over their results with COPY --from=<stage_name>.

```dockerfile
#
# Build stage: build and compile ASP.net application
#
FROM microsoft/dotnet:2.1-sdk AS build
# Setting default build environment to LOCAL, other options are: PPW, PROD and TEST
ARG environment=LOCAL
# Setting target platform to AnyCPU, other options are: x64, x86
ARG platform=AnyCPU
# Copy csproj files to perform a restore (the trailing / makes the destination a directory)
WORKDIR /app
COPY src/<project_dir>/*.csproj ./<project_dir>/
COPY src/<project_dependency_dir>/*.csproj ./<project_dependency_dir>/
# Perform the restore
WORKDIR /app/<project_dir>
RUN dotnet restore
# Copy the source code
WORKDIR /app
COPY src/<project_dir>/. ./<project_dir>
COPY src/<project_dependency_dir>/. ./<project_dependency_dir>
COPY src/SolutionItems ./SolutionItems
# Perform a build
WORKDIR /app/<project_dir>
RUN dotnet publish -c $environment -o out

#
# Runtime stage: serve ASP.net application on port 80
#
FROM microsoft/dotnet:2.1-runtime AS runtime
# Setting default build environment to LOCAL, other options are: PPW, PROD and TEST
# (ARG values do not carry over between stages, so it is declared again)
ARG environment=LOCAL
# Setting environment
ENV ASPNETCORE_ENVIRONMENT=$environment
ENV ASPNETCORE_URLS=http://*:80
# Expose port 80
EXPOSE 80
# Copy and run the application
WORKDIR /app
COPY --from=build /app/<project_dir>/out/ ./
ENTRYPOINT ["dotnet", "<project>.dll"]
```

```dockerfile
#
# Build stage: build and compile java application (with maven)
#
FROM maven:3.5-jdk-10-slim AS build
# Copy source and POM file
WORKDIR /usr/src/app
COPY src/ ./src/
COPY pom.xml ./
# Execute maven clean and package
RUN mvn -f ./pom.xml clean package

#
# Runtime stage: serve Java application on port 80
#
FROM openjdk:10-slim AS runtime
# Expose port 80
EXPOSE 80
# Copy and run the application
COPY --from=build /usr/src/app/target/<application_name>.jar /usr/app/<application_name>.jar
ENTRYPOINT ["java","-jar","/usr/app/<application_name>.jar"]
```

Image metadata

An image can be created with a bunch of metadata attached to it, providing information about the image or its creator. All of this metadata can be embedded in the Dockerfile with the LABEL instruction. Its usage is pretty straightforward: just provide one or more key/value pairs.

```dockerfile
LABEL <key>=<value> <key>=<value> <key>=<value> ...
LABEL "com.example.vendor"="ACME Incorporated"
LABEL com.example.label-with-value="foo"
LABEL version="1.0"
LABEL description="This text illustrates \
that label-values can span multiple lines."
```

DockerFile reference

If you want additional information about the instructions we used, you can always visit https://docs.docker.com/engine/reference/builder/; this page covers all instructions and more, with a detailed, in-depth explanation of each.

Some images on Docker Hub also expose their Dockerfile, so by looking at those you can learn how an image is created on their end. This is also handy to get a feeling for how to write a Dockerfile, so do look at some of those public Dockerfiles: they give you insight into what is possible. The reference itself is complete, but it takes time to read.

Compose images together to form a stack

Installation

Before we can start, we need to install Docker Compose, since it may not be installed by default. Head over to https://docs.docker.com/compose/install/ and follow the installation instructions for your platform.

Compose with several existing images

Once we have the compose command installed, we can create a stack by writing a compose YML file, in the following form:

```yaml
version: '3.6' # Depending on the docker engine you have and which features you want available, use the highest possible.
# Definition of services (or containers to use)
services:
  sql-server: # Self chosen name for a SQL backend
    image: microsoft/mssql-server-linux # Image to use
    environment: # Environment parameters as a list
      - ACCEPT_EULA=Y
      - SA_PASSWORD=Qwerty_123!
      - VIRTUAL_PORT=8080
    ports: # Ports to expose on host machine
      - "1433:1433"
  rest-api: # Self chosen name for an API
    image: <api_image_name>
    ports:
      - "9900:9900"
    depends_on: # If a container needs another container to be active before it can perform its job
      - sql-server
  ui: # Self chosen name for a frontend
    image: <ui_image_name>
    ports:
      - "80:80"
    depends_on:
      - rest-api
```

This docker-compose.yml file (the default name, used most of the time) creates 3 containers based on 3 existing images: the Microsoft SQL Server image, and 2 custom images for the API backend and the UI frontend. The ports listed under each service's ports entry are mapped onto the same port of the host machine.

A docker-compose stack is started by running docker-compose with the up command in the directory where our docker-compose.yml file resides.

```shell
# 'up' creates and starts the services in a single go
docker-compose up

# Or we can first create the services ourselves and then start them
docker-compose create
docker-compose start
```

This creates a default network for the containers to communicate on (since we did not define a network ourselves in this example) and the 3 containers. Compose then starts each container, much like a 'docker run' with the port mappings we provided. Once all containers have started, every part of the application can be reached on the host through its respective port.
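Inside the compose file, that implicit network is called `default`. On the host it typically shows up in `docker network ls` as `<project>_default`, where the project name defaults to the directory name. As a sketch, assuming you want to set its options explicitly rather than rely on the implicit one, you can configure it under the top-level `networks` key without attaching any service to it by hand:

```yaml
# Top-level section of docker-compose.yml
networks:
  default: # The network Compose attaches every service to when no networks are listed
    driver: bridge # The usual driver for containers on a single host
```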

Once we no longer need the containers, we can tear down the entire stack with the down command, which removes the network and the 3 containers for us. If we want to keep the containers around for later use, we can simply stop them instead, which keeps everything in place for a new start later on.

```shell
# 'down' stops the services and removes their containers and the network
docker-compose down

# Or we can just stop all the services, but keep their containers around
docker-compose stop
```

Compose with custom builds

But what if we do not have an exact image available to create our stack from, or we just want to make a couple of tweaks to an existing image before we use it? Must we have an image available to use in a stack? No, we can also build an image for use in a compose stack. This is done by replacing image with build, in the following manner:

```yaml
version: '3.6' # Depending on the Docker Engine you have and which features you want available, use the highest version possible.

# Definition of services (or containers to use)
services:
  sql-server: # Self-chosen name
    image: microsoft/mssql-server-linux # Image to use
    container_name: my-compose-stack-sql # Custom name for the container/service
    env_file:
      - sql-variables.env # File containing all SQL Server ENV parameters (ACCEPT_EULA, SA_PASSWORD, ...)
    volumes:
      - logvolume-sql:/var/log
    networks:
      backend: # Backend network, to separate SQL/API traffic from API/UI traffic
        aliases: # Host aliases to reach the sql-server container with on the backend network
          - db
          - database
  rest-api:
    build: ./api # Subdirectory 'api' containing a Dockerfile to build a custom image from
    container_name: my-compose-stack-api # Custom name for the container being built
    depends_on:
      - sql-server
    volumes:
      - logvolume-api:/var/log
    networks:
      backend:
        aliases: # Host aliases to reach the rest-api container with on the backend network
          - api
          - backend
          - rest
      frontend:
        aliases: # The same aliases on the frontend network
          - api
          - backend
          - rest
  ui:
    build:
      context: ./ui # Subdirectory 'ui' containing a Dockerfile to build a custom image from
      args: # Custom build args to use with the UI Dockerfile
        build_number: 1
        some_build_argument: # No value: Compose takes it from the environment it runs in
        some_other_flag: "true" # Boolean values must be enclosed in quotes
    labels: # Metadata labels for the container
      com.example.description: "Our super UI"
      com.example.department: "Custom software development"
      com.example.label-with-empty-value: ""
    container_name: my-compose-stack-ui # Custom name for the container being built
    ports:
      - "80:80"
    depends_on:
      - rest-api
    volumes:
      - logvolume-ui:/var/log
    networks:
      frontend:
        aliases: # Host aliases to reach the ui container with on the frontend network
          - ui
          - frontend
          - app

# Volume definitions for the entire stack
volumes:
  logvolume-sql: {}
  logvolume-api: {}
  logvolume-ui: {}

# Definition of the networks used by the services
networks:
  frontend:
    driver: bridge # Default driver: a bridge network on the Docker host that the containers attach to
  backend:
    driver: bridge
    internal: true # Isolated from external networks; no ports are published, so 1433 is not exposed on the host
```

This compose file builds custom images for our API and UI services from the Dockerfiles it finds in the api and ui subdirectories (relative to the compose file). It first runs a docker build with those Dockerfiles and then starts the containers based on the newly built images.
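By default, Compose picks a name for an image it builds itself. When a service has both `build` and `image`, Compose builds from the Dockerfile and tags the result with the given image name. A minimal sketch, where `my-registry/my-compose-stack-api:1.0` is a hypothetical name:

```yaml
services:
  rest-api:
    build: ./api # Build from the Dockerfile in ./api
    image: my-registry/my-compose-stack-api:1.0 # Hypothetical tag given to the built image
```

`docker-compose build` builds the images without starting anything, and `docker-compose up --build` rebuilds them before starting the stack.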

We also split up the networking of our services. Instead of a single network, we now have 2 networks, a backend and a frontend network, and only our API service is present on both. This shows that we can isolate services from each other at the network level, so the UI cannot reach the database on the backend network directly.

We also no longer have access to our database and API from the host, since we no longer publish their ports in our stack. Only port 80 is now available on our host, and potentially to external clients.
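If port 80 should be reachable from the host only and not from other machines, the short `ports` syntax also accepts a host IP in front of the mapping. A minimal sketch for the ui service, assuming the loopback interface is what you want to bind to:

```yaml
services:
  ui:
    ports:
      - "127.0.0.1:80:80" # Published on the host's loopback interface only
```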

Using compose to create a Docker Swarm

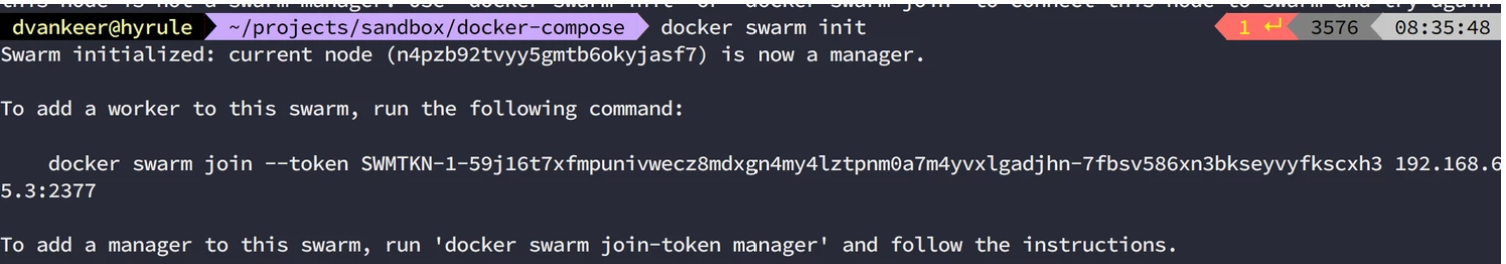

A compose file can also be used to deploy a stack to a Docker swarm. First, initialise your Docker Engine as a swarm manager with the following command:

```shell
docker swarm init
```

This outputs a token (as part of a ready-made docker swarm join command) that can be used to add additional nodes to the swarm. Once your swarm is initialised, you can deploy your stack to it with:

```shell
# 'my-stack' is a stack name of your own choosing (the command requires one)
docker stack deploy --compose-file docker-compose.yml my-stack
```

This creates all services mentioned in your compose file and takes into account the additional deploy configuration in the file, such as how many instances of a certain service need to be available on the swarm:

```yaml
services:
  <service-name>:
    deploy:
      replicas: 2 # Run 2 containers of this service on the swarm
      update_config:
        parallelism: 2 # Update both containers at the same time
      restart_policy:
        condition: on-failure # Restart a container when it exits with an error
```
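`docker stack deploy` only works with pre-built images. It ignores `build` (and `container_name`), so every service in a swarm stack needs an `image` that the swarm nodes can pull. A sketch combining that with a rolling update, one container at a time. The image name is hypothetical, and `delay` is an extra `update_config` option that the example above does not use:

```yaml
version: '3.6'
services:
  rest-api:
    image: my-registry/my-compose-stack-api:1.0 # Hypothetical image, built and pushed beforehand
    deploy:
      replicas: 2
      update_config:
        parallelism: 1 # Update one container at a time
        delay: 10s # Wait 10 seconds between update batches
      restart_policy:
        condition: on-failure
```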

Docker provides a nice tutorial that guides you through building your first swarm. If you are interested, take a look at https://github.com/docker/labs/blob/master/beginner/chapters/votingapp.md.

Some more Docker stuff

- Machine: https://github.com/StefanScherer/windows-docker-machine for creating a Windows Docker machine, so you can build and run Windows containers from Linux/Mac

- Network: https://docs.docker.com/network/

Microcontainers

Container clusters

Microsoft .Net microservices (with Docker)

This article was written by David Van Keer